Why evaluate student success interventions?

- Evaluation outcomes give academic schools a way to assess how far their interventions are effective and are having a positive impact on students' achievement. They also help schools to explore further avenues for student support and for staff engagement in Equality, Diversity and Inclusion activities. In turn, the impact of these interventions is intended to reduce our institutional gaps.

- The Office for Students (OfS) Regulatory Framework requires institutions to provide evidence, through evaluation, of the impact of delivering their Access and Participation Plan (APP), in line with the OfS standards of evidence guidance.

- To meet the institutional principles of accountability, good practice and value for money, this evaluation framework provides a tool to identify the interventions that are most effective in reducing attainment differentials. This gives us a more agile way to meet OfS targets, reduce gaps faster and improve equality of opportunity for students.

How do we evaluate?

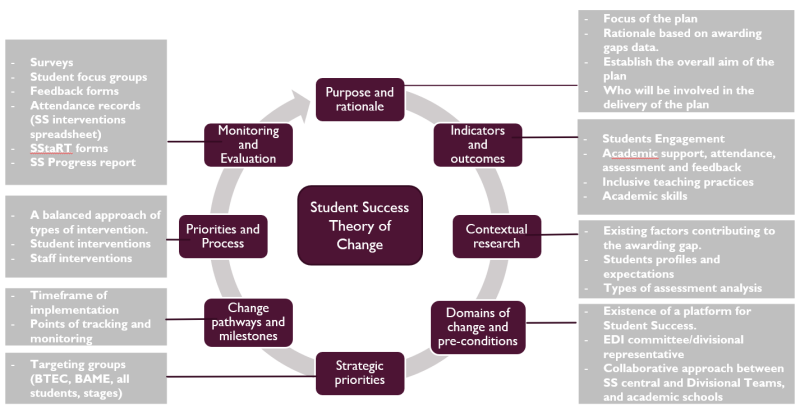

The Student Success evaluation framework is informed by Theory of Change (ToC), an approach to programme planning and evaluation.

For programme planning, the Student Success ToC provides a tool and road map for academic schools and divisions to:

- define the purpose and rationale of their interventions and activities

- establish how the data on awarding and continuation gaps will inform decisions around targets and expected outcomes

- identify the domains of change and pre-conditions necessary to effectively develop the programme

- outline strategic priorities for the types of intervention, the target groups of students (by socio-demographic characteristic and stage of study) and the evaluation outcomes.

For programme evaluation, the Student Success ToC includes two interlinked types of evaluation: process evaluation and impact evaluation.

Process evaluation allows us to understand how interventions have been implemented and delivered, and to identify how far that process has been effective in achieving the interventions' expected outcomes. Through monitoring data on student and staff engagement with these interventions, case studies and feedback analysis, we can establish how effectively the interventions have reached their target students and how they have contributed to the changes in behaviours and attitudes needed for institutional change. Under the OfS standards of evidence, this type of evaluation is classed as Type 1 (Narrative).

Impact evaluation provides a methodology to establish what difference an intervention made and how far its outcomes contributed to improvements in students' attainment or continuation. The OfS classes this type of evaluation as Type 2 (Empirical Enquiry) evidence of impact.

We begin the impact evaluation by establishing intervention groupings; this gives us a much better chance of having a population large enough to draw conclusions from with confidence. The Student Success Evaluation Framework uses both broad and granular groupings: for example, skills workshops form a granular grouping, while skills interventions as a whole form a broad one. By calculating each student's change in overall average between two data points, we can then examine where attendance at an intervention correlated with a larger increase in average. Outliers (data points that do not follow the behaviour of the rest of the group) are removed before this analysis takes place, and we account for the fact that, for example, different subject areas are likely to have different distributions of change in average.
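The grouping, change-in-average and outlier steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the framework's actual implementation: the record fields (`subject`, `avg_before`, `avg_after`, `interventions`), the intervention codes in `BROAD_GROUP` and the use of Tukey's fences (Q1 - 1.5 × IQR, Q3 + 1.5 × IQR) as the outlier rule are all hypothetical. Computing the fences separately for each subject is one way to reflect the point that subjects differ in their distributions of change.

```python
from collections import defaultdict
from statistics import mean, quantiles

# Hypothetical mapping from granular intervention codes to broad groupings.
BROAD_GROUP = {
    "SKILLS_WORKSHOP": "SKILLS",
    "SKILLS_CLINIC": "SKILLS",
    "PEER_MENTORING": "MENTORING",
}


def add_changes(records):
    """Attach each student's change in overall average between two data points."""
    for r in records:
        r["change"] = r["avg_after"] - r["avg_before"]
    return records


def remove_outliers_by_subject(records, k=1.5):
    """Drop records outside Tukey's fences (Q1 - k*IQR, Q3 + k*IQR),
    computed separately for each subject so that each subject's own
    distribution of changes defines what counts as an outlier."""
    by_subject = defaultdict(list)
    for r in records:
        by_subject[r["subject"]].append(r)
    kept = []
    for rows in by_subject.values():
        changes = [r["change"] for r in rows]
        if len(changes) < 4:
            kept.extend(rows)  # too few points to estimate quartiles
            continue
        q1, _, q3 = quantiles(changes, n=4)
        iqr = q3 - q1
        low, high = q1 - k * iqr, q3 + k * iqr
        kept.extend(r for r in rows if low <= r["change"] <= high)
    return kept


def mean_change_by_attendance(records, broad_group):
    """Mean change for students who attended any intervention in the broad
    grouping versus students who attended none of them.
    statistics.mean raises StatisticsError if either group is empty."""
    attended, not_attended = [], []
    for r in records:
        if any(BROAD_GROUP.get(code) == broad_group for code in r["interventions"]):
            attended.append(r["change"])
        else:
            not_attended.append(r["change"])
    return mean(attended), mean(not_attended)
```

In use, `add_changes` would run first, then `remove_outliers_by_subject`, then `mean_change_by_attendance` for each broad grouping of interest. A higher mean change among attendees shows an association only; on its own it does not show that the intervention caused the improvement.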

The methodology has developed over time as we have refined the statistical analysis and made it more robust. We justify the choice of statistical techniques by examining the distributions of the data, and we set out our reasons for removing any data that we identify as outliers.
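As a hedged illustration of examining a distribution before choosing a technique, the sketch below computes the moment skewness of a set of changes in average and applies a hypothetical rule of thumb (|skewness| ≤ 1 treated as roughly symmetric) to suggest either a mean-based or a rank-based comparison. The threshold, the helper names and the two example tests are assumptions for illustration, not rules stated by the framework.

```python
from statistics import mean, pstdev


def skewness(values):
    """Moment (population) skewness: mean cubed deviation divided by
    the cubed population standard deviation."""
    m = mean(values)
    sd = pstdev(values)
    if sd == 0:
        return 0.0  # all values identical: treat as symmetric
    return mean([(x - m) ** 3 for x in values]) / sd ** 3


def suggest_technique(values, threshold=1.0):
    """Hypothetical rule of thumb for choosing a comparison method."""
    if abs(skewness(values)) <= threshold:
        return "mean-based (e.g. Welch's t-test)"
    return "rank-based (e.g. Mann-Whitney U test)"
```

Such a check could be run per subject and per intervention grouping, ideally alongside a visual inspection such as a histogram, since a single summary statistic is only a rough guide to the shape of a distribution.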

Once we have a clean dataset with outliers removed, we categorise the intervention codes that align with a statistically significant improvement in students' attainment or attendance, and these are then subject to contribution analysis. Once the contribution analysis has been completed in line with the Theory of Change model, we can establish which types of intervention have enough evidence to support a chain of causality linking them to improved attendance or attainment.
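A minimal sketch of flagging intervention codes with a statistically significant improvement, assuming a one-sided permutation test on the difference in mean change between students who attended a given code and those who did not. The choice of test, the record fields (`change`, `interventions`), the significance level of 0.05 and the function names are hypothetical; the framework does not specify which test it uses.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_p_value(treated, control, n_perm=10_000, seed=0):
    """One-sided permutation test of H1: mean(treated) > mean(control).

    Returns the proportion of random relabellings (counting the observed
    labelling once) whose difference in means is at least the observed one.
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    observed = _mean(treated) - _mean(control)
    pooled = list(treated) + list(control)
    n_t = len(treated)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = _mean(pooled[:n_t]) - _mean(pooled[n_t:])
        # small tolerance so floating-point rounding does not hide ties
        if diff >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one so p is never exactly 0


def flag_significant_codes(records, codes, alpha=0.05, n_perm=10_000, seed=0):
    """Return (code, p) for each intervention code whose attendees show a
    significantly larger mean change than non-attendees.
    No correction for multiple comparisons is applied."""
    flagged = []
    for code in codes:
        treated = [r["change"] for r in records if code in r["interventions"]]
        control = [r["change"] for r in records if code not in r["interventions"]]
        if len(treated) < 2 or len(control) < 2:
            continue  # too few students in one group to test
        p = permutation_p_value(treated, control, n_perm=n_perm, seed=seed)
        if p < alpha:
            flagged.append((code, p))
    return flagged
```

A permutation test is used here because it does not assume that changes in average are normally distributed. When many codes are tested at once, a multiple-comparison correction (for example Holm-Bonferroni) would reduce false positives; the sketch applies none. Flagged codes would still need contribution analysis before any causal claim is made.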