Mathematics, Data Science and Actuarial Science

Dental patient system wins Start Up Final

Mathematics, Statistics and Actuarial Science student Seth Mashate was declared the winner for his business 'Data Ravens', a patient management system designed to optimise business performance in dental practices.

Chloe's Story

The Year in Industry was particularly crucial, but I also learnt how important clear communication is.

Chloe Fazackerley, Actuarial Science graduate

Our research community

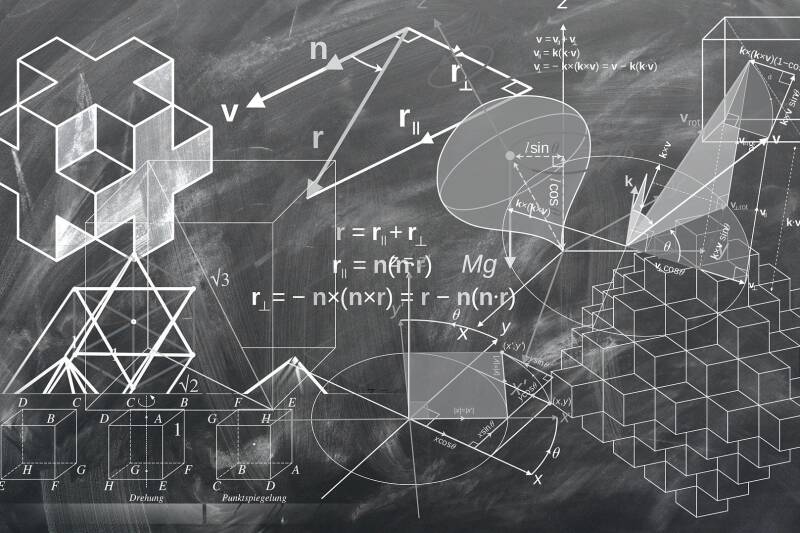

Rogue waves, tsunamis and solitons

Our researchers are some of the most influential thinkers in their respective fields and their research informs their teaching. Professor Peter Clarkson discusses applying non-linear differential equations to wave phenomena.